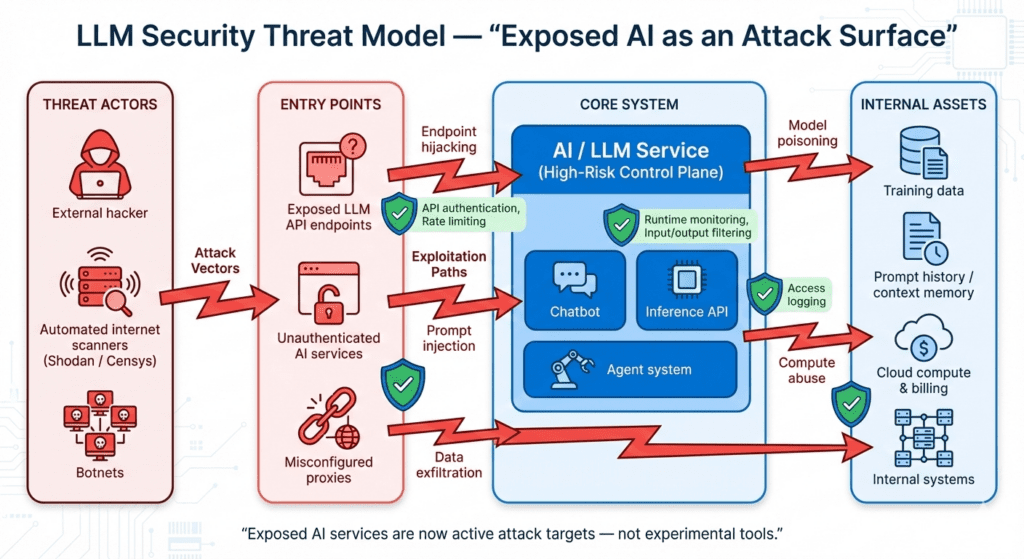

Large Language Models (LLMs) power modern AI services, but in 2026 threat actors are increasingly turning these systems into weapons, not just targets. Recent research uncovered Operation Bizarre Bazaar, a campaign that systematically scans for and hijacks exposed LLM endpoints.

What’s Happening?

Cybercriminals scan for misconfigured or unauthenticated LLM endpoints, including self-hosted APIs, staging environments, and open chatbots, often within hours of those endpoints appearing on internet scanners like Shodan or Censys. Once accessed, these endpoints are abused for computing power, data exfiltration, and even lateral access into broader systems.
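Defenders can run the same kind of check against their own inventory before an attacker does. The sketch below sends a single request with no credentials and labels the result. It is a minimal illustration, not a scanner: the function names and labels are hypothetical, and the path to probe (many OpenAI-compatible servers list models at `/v1/models`, but yours may differ) is an assumption you should adjust. Only probe endpoints you own or are authorized to test.

```python
import urllib.error
import urllib.request


def classify_status(status: int) -> str:
    """Map the HTTP status of an unauthenticated probe to an exposure label."""
    if status in (401, 403):
        return "auth-required"   # the server rejected the anonymous request
    if 200 <= status < 300:
        return "exposed"         # the server answered without credentials
    return "inconclusive"        # e.g. 404 or 5xx: needs a manual look


def probe(url: str, timeout: float = 5.0) -> str:
    """Send one GET with no credentials and classify the response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify_status(resp.status)
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; the status is in err.code.
        return classify_status(err.code)
    except (urllib.error.URLError, OSError):
        return "unreachable"


if __name__ == "__main__":
    # Hypothetical inventory entry; replace with endpoints you own.
    print(probe("http://localhost:8000/v1/models"))
```

An "exposed" result on an endpoint that should be private is the signal to investigate. An "inconclusive" result does not prove the endpoint is safe.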

Why It Matters

The exploitation of LLM services presents a unique risk profile:

- Resource Theft: Unauthorized usage drives up inference costs and causes cloud billing spikes.

- Data Leakage: Conversation context and prompt history can contain sensitive information.

- Attack Pivot: Compromised LLM systems can become a beachhead into private networks.

Other LLM-Related Attack Vectors to Watch in 2026

Beyond exposed endpoints, security researchers and industry forecasts highlight a broader set of AI-targeted threats:

- Misconfigured Proxy Abuse: Automated scanning exploits open proxies to access LLM services; more than 90,000 attempts were logged between late 2025 and early 2026.

- Prompt Injection and Jailbreaking: Attack techniques that manipulate model logic to execute unauthorized instructions or reveal sensitive data.

- Backdoor and Model Poisoning Attacks: Emerging research shows persistent triggers and backdoors can traverse LLM workflows.

- Autonomous Agent Exploits: Inter-agent threats and tool misuse are surfacing in production environments.

Mitigation Strategies

To defend against these threats:

✔ Inventory ALL AI endpoints and audit for exposure.

✔ Enforce strong authentication on AI APIs and proxies.

✔ Implement runtime monitoring for anomalous usage patterns.

✔ Apply model-specific hardening (e.g., denial/response filters).
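As one illustration of runtime monitoring, the sketch below flags clients whose request rate exceeds a fixed ceiling within a sliding time window. It is a simplified example under stated assumptions: the event format, the function name `flag_bursts`, and the thresholds are all hypothetical, and a production setup would more likely track tokens, cost, and per-key baselines in an existing observability stack.

```python
from collections import defaultdict, deque


def flag_bursts(events, window_seconds=60.0, max_requests=100):
    """Return the client IDs that exceed max_requests in any sliding window.

    events: iterable of (timestamp_seconds, client_id), sorted by timestamp.
    """
    recent = defaultdict(deque)  # client_id -> timestamps still in the window
    flagged = set()
    for ts, client in events:
        window = recent[client]
        window.append(ts)
        # Drop timestamps that have aged out of the window.
        while window and ts - window[0] >= window_seconds:
            window.popleft()
        if len(window) > max_requests:
            flagged.add(client)
    return flagged


if __name__ == "__main__":
    # Hypothetical log: "key-a" sends 10 requests in under a second,
    # "key-b" sends one request every 30 seconds.
    log = [(i * 0.1, "key-a") for i in range(10)]
    log += [(i * 30.0, "key-b") for i in range(10)]
    print(flag_bursts(sorted(log), window_seconds=1.0, max_requests=5))
```

A fixed ceiling is the simplest possible rule. It catches blunt abuse such as a hijacked key being used for bulk inference, but slower misuse would need a comparison against each client's normal usage.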

As AI becomes embedded in business infrastructure, treating AI systems as part of your cyber risk surface, not just as a feature, is essential.

Hacker Simulations helps organizations uncover these blind spots before attackers do. Through realistic attack simulations and AI-focused penetration testing, we show how LLMs and AI pipelines behave when they’re targeted by modern threat actors, so you can strengthen defenses based on evidence, not assumptions.

If your organization is deploying or relying on AI in 2026, now is the time to test it like an attacker would.

Talk to Hacker Simulations and understand your true AI security posture before it’s tested for real.